Data Transformers Podcast

What is Responsible AI and Ethical AI?

Episode Title: What is Responsible AI and Ethical AI?

Episode Summary:

Can Artificial Intelligence help society as much as it helps business? Is this the golden age for AI, but only for certain sections of society and not for all? We need to establish ethical standards in dealing with artificial intelligence and to answer the question: What still makes us as human beings unique?

Artificial Intelligence (AI) technology poses serious ethical risks to individuals and society. David Van Bruwaene explains how we can deal with these risks more effectively if we approach them using tools from applied philosophy.

Why is there a need for a company focused on ethical AI? (01:30): When David started working on AI, there was little focus on ethics and governance. Most of the work was engineers digging into deep learning and hedge funds using sentiment analysis to study purchase behavior, with little attention to whether the resulting recommendations were appropriate. David saw a need for more discussion of the ethical implications of those recommendations.

Who defines the fairness of AI? (08:00): In finance, model risk management is handled procedurally, largely following what governments lay out. Similarly, bias risk can be handled through rigorous documentation, reproducibility, and auditability. One example is gaps in resumes, where a model may not account for gaps caused by, for instance, pregnancy. The first step is identifying risks together with data scientists.

Who is accountable for Responsible AI? (11:30): The board should be ultimately accountable. Companies need separate test data sets and challenger models, and a model risk report should be an outcome. Companies should also have a Chief Model Risk Officer.
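As a rough illustration of the separate test set and challenger model idea, here is a minimal Python sketch. The two toy models, the field names (`income`, `debt`), the held-out records, and the report layout are all hypothetical and are not taken from the episode. It only shows the general shape of comparing a current model against a challenger on data that neither was built on.

```python
def accuracy(model, test_set):
    """Share of held-out examples the model labels correctly."""
    correct = sum(1 for features, label in test_set if model(features) == label)
    return correct / len(test_set)


def model_risk_report(champion, challenger, test_set):
    """Compare the current (champion) model with a challenger on the same held-out data."""
    champion_acc = accuracy(champion, test_set)
    challenger_acc = accuracy(challenger, test_set)
    return {
        "champion_accuracy": champion_acc,
        "challenger_accuracy": challenger_acc,
        "challenger_better": challenger_acc > champion_acc,
    }


# Hypothetical toy models: each returns True to approve a loan.
def champion(applicant):
    return applicant["income"] >= 50000


def challenger(applicant):
    return applicant["income"] >= 40000 and applicant["debt"] < 20000


# Hypothetical held-out test set, kept separate from any training data.
held_out = [
    ({"income": 60000, "debt": 5000}, True),
    ({"income": 45000, "debt": 10000}, True),
    ({"income": 55000, "debt": 30000}, False),
    ({"income": 30000, "debt": 1000}, False),
]

report = model_risk_report(champion, challenger, held_out)
print(report)
```

In practice a model risk report would likely cover more than accuracy, such as documentation and fairness checks, but the comparison step would look broadly similar.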

Applicability of the Fairly AI framework (13:00): The framework is horizontal, so it applies across industries. The obstacles are budget and willingness. Different departments will also have different risk levels. For example, marketing is considered low risk, whereas loan departments are considered high risk.

What can you do with historical bias in data? (17:00): There may be technical fixes, but they can be costly and time consuming. First look at the data pre-processing steps. The better way to deal with it is through in-processing steps that remove information about protected status.
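A minimal Python sketch of removing protected-status information from a record before it reaches a model follows. The field names and the sample applicant are hypothetical, since the episode does not name specific attributes. Dropping fields like this does not remove proxies, meaning other fields that correlate with protected status, so treat it as a starting point only.

```python
# Hypothetical protected-status fields; the episode does not list specific ones.
PROTECTED_FIELDS = {"gender", "age", "marital_status"}


def strip_protected(record, protected=PROTECTED_FIELDS):
    """Return a copy of the record without protected-status fields."""
    return {key: value for key, value in record.items() if key not in protected}


# Hypothetical applicant record.
applicant = {"gender": "F", "age": 34, "years_experience": 8, "income": 72000}

features = strip_protected(applicant)
print(features)
```

The original record is left untouched, so the protected fields remain available for later auditing of model outcomes.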

Should there be more regulations in AI? (22:00): Regulations come when business fails. All the big companies are investing in Responsible AI.

AI implementation and success in AI (25:00): Financial institutions are investing heavily, and insurance and healthcare are investing as well. There are plenty of roadblocks, starting with training data. Another is giving outside consultants access to training data when they are brought in.

USEFUL LINKS

Twitter:

https://twitter.com/DataTransforme2

https://twitter.com/peggy_tsai

LinkedIn:

https://www.linkedin.com/in/davidvanbruwaene/

https://www.linkedin.com/company/69241922

Data Transformers Podcast

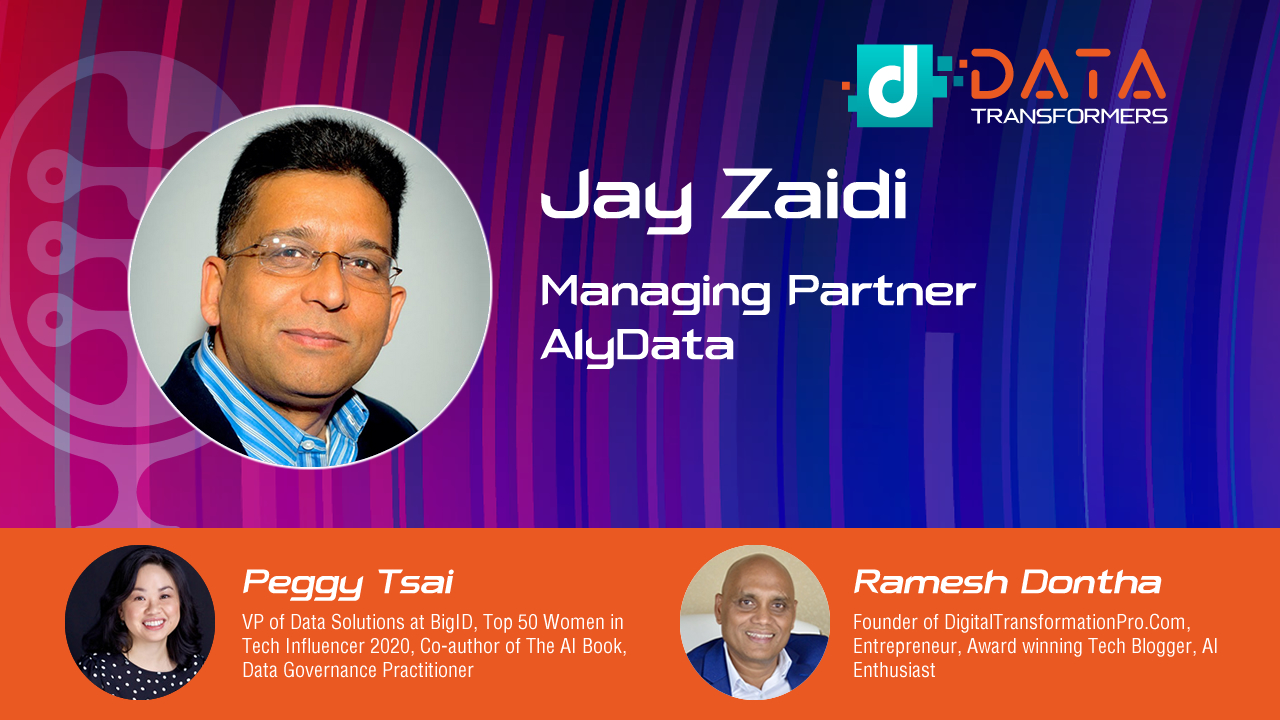

Join Peggy and Ramesh as they explore the exciting world of Data Management, Data Analytics, Data Governance, Data Privacy, Data Security, Artificial Intelligence, Cloud Computing, and the Internet of Things.
